Do you know why ChatGPT understands context within a sentence?

For example, when you say "Michael Jordan is a basketball player. Who is Michael?", the model will respond "Michael Jordan is a basketball player..." and then continue with what it knows about "Michael Jordan". If you let the model keep talking, you will start to see hallucinations.

The secret? ChatGPT has something called an induction head.

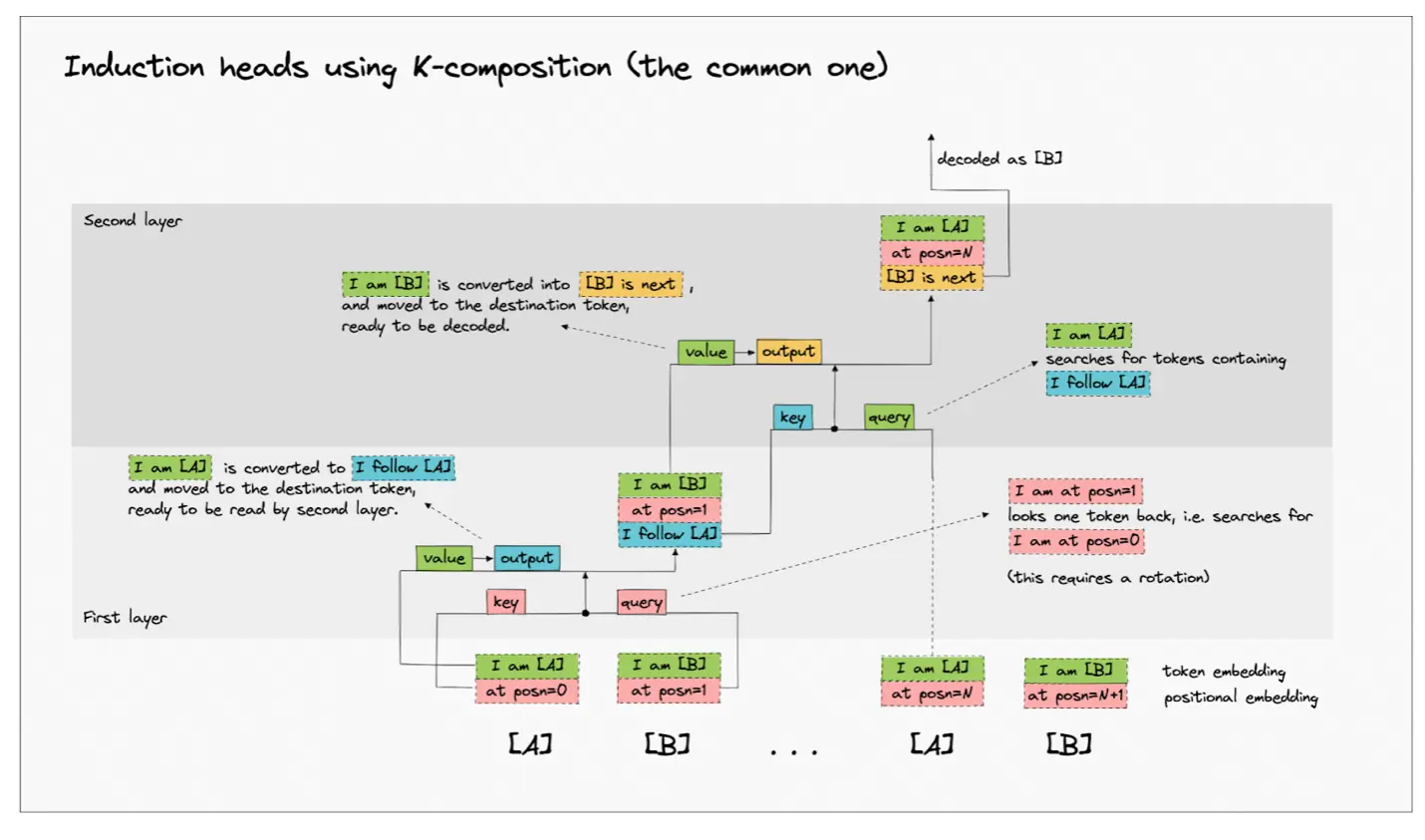

An induction head is an attention head that implements a simple algorithm: it attends to the token immediately after an earlier copy of the current token.

For example, when you say "Michael_1 Jordan_1 is a basketball player. Who is Michael_2?" (the subscripts mark the first and second occurrence of each word), the "Michael_2" token will attend to "Jordan_1". This is why, when you ask "Who is Michael?", the model knows that you are referring to "Michael Jordan".
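To make the attention pattern concrete, here is a minimal Python sketch of the lookup an induction head approximates. It is a toy under stated assumptions: the sentence is split into whole words (GPT-2 actually uses sub-word tokens), the lookup is a hard match instead of soft attention weights, and the function name `induction_targets` is made up for illustration.

```python
def induction_targets(tokens):
    """For each position, return the index of the token that comes right
    after the most recent earlier copy of the current token (or None)."""
    last_seen = {}  # token -> index of its most recent occurrence so far
    targets = []
    for i, tok in enumerate(tokens):
        prev = last_seen.get(tok)
        # Attend to the position just after the earlier copy, if there is one.
        targets.append(prev + 1 if prev is not None else None)
        last_seen[tok] = i
    return targets


# Word-level split of the example sentence (an assumption, not GPT-2's tokenizer).
tokens = ["Michael", "Jordan", "is", "a", "basketball", "player", ".",
          "Who", "is", "Michael"]
targets = induction_targets(tokens)

# The second "Michael" (last position) points back at "Jordan".
print(tokens[targets[-1]])
```

The second "is" also gets a target (the "a" that followed the first "is"), which shows the pattern is purely about repeated tokens and not about names specifically.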

Fun fact: In GPT-2 Small, layers 5, 6, and 7 have induction heads! Specifically, heads 5.1, 5.5, 6.9, 7.2, and 7.10.

For more on how an induction head works, see the diagram "Induction heads using K-composition".

Diagram is by Callum McDougall: https://www.lesswrong.com/posts/TvrfY4c9eaGLeyDkE/induction-heads-illustrated

#largelanguagemodel