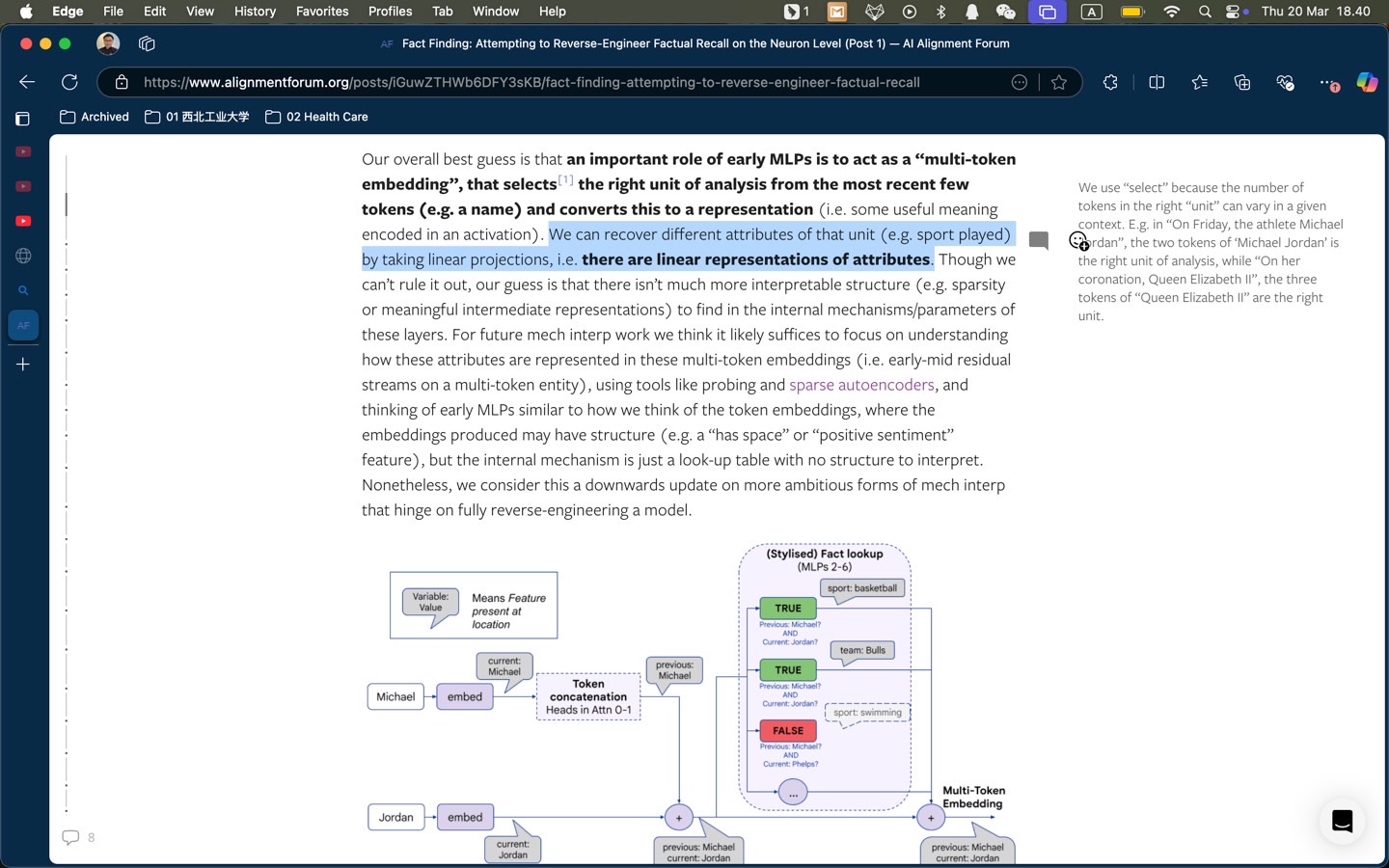

The multilayer perceptron (MLP) in the Transformer model is where the model stores facts (well, sort of). In other words, given the input "Michael Jordan plays the sport of ____", some magic called linear projections lets us recover the token "basketball".
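To make the "magic" a bit more concrete, here is a minimal toy sketch in NumPy. It is not the mechanism reported in the DeepMind post, and a real Transformer learns its weights rather than having them written by hand. Everything here (the names `W_in`, `W_out`, `W_unembed`, `recall`, the 8-dimensional space, and the three facts) is an assumption made purely for illustration. The idea it shows: one projection detects the subject, a ReLU gates it, a second projection writes the answer direction, and an unembedding projection reads off the token.

```python
import numpy as np

# Hypothetical toy vocabulary and facts, chosen only for illustration.
vocab = ["golf", "basketball", "baseball"]
facts = {
    "Michael Jordan": "basketball",
    "Babe Ruth": "baseball",
    "Tiger Woods": "golf",
}
subjects = list(facts)

d_model = 8
rng = np.random.default_rng(0)

# Orthonormal directions so that subjects and tokens do not interfere.
q, _ = np.linalg.qr(rng.normal(size=(d_model, d_model)))
subject_vecs = {s: q[:, i] for i, s in enumerate(subjects)}
token_vecs = {t: q[:, len(subjects) + i] for i, t in enumerate(vocab)}

# First projection ("keys"): one hidden neuron per subject.
W_in = np.stack([subject_vecs[s] for s in subjects])  # (hidden, d_model)
# Second projection ("values"): each neuron writes its fact's token direction.
W_out = np.stack([token_vecs[facts[s]] for s in subjects], axis=1)  # (d_model, hidden)
# Unembedding: maps the residual vector to one logit per vocabulary token.
W_unembed = np.stack([token_vecs[t] for t in vocab])  # (vocab, d_model)


def recall(subject: str) -> str:
    """Run the toy MLP on a subject vector and return the top token."""
    x = subject_vecs[subject]
    hidden = np.maximum(W_in @ x, 0.0)  # ReLU keeps only the matching neuron
    out = W_out @ hidden                # write the stored answer direction
    logits = W_unembed @ out            # project onto the vocabulary
    return vocab[int(np.argmax(logits))]


print(recall("Michael Jordan"))  # basketball
```

Because the directions are orthonormal, each subject activates exactly one hidden neuron, which is what makes the lookup clean here. In a trained model the facts are spread across many neurons and layers, which is part of why the "well, sort of" caveat applies.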

"Fact Finding: Attempting to Reverse-Engineer Factual Recall on the Neural Level (Post 1). by Google DeepMind.